3 New AI-Powered Features That Make Google Lens “Smarter” Than Before

by Islam Ahmed

At this year’s Google I/O, the search giant made a host of announcements about its AI-powered visual recognition app, Google Lens.

For starters, Google Lens will be built directly into the camera app on supported devices from LG, Motorola, Xiaomi, Sony, Nokia, and others, as well as on Google’s own Pixel phones. Here are the other useful changes:

New Google Lens Features

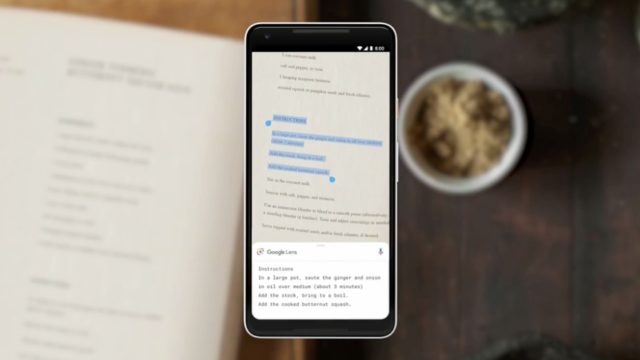

Smart Text Selection

Point your camera at some writing, and Google Lens can now recognize the text and understand the words. This lets users copy and paste text directly from the real world, whether it’s a business card, a note, a Wi-Fi password, a recipe, or something else.

Smart text selection also surfaces relevant information about the selected text. For instance, you can find out what a strangely named dish at a fancy restaurant actually is without embarrassing yourself.

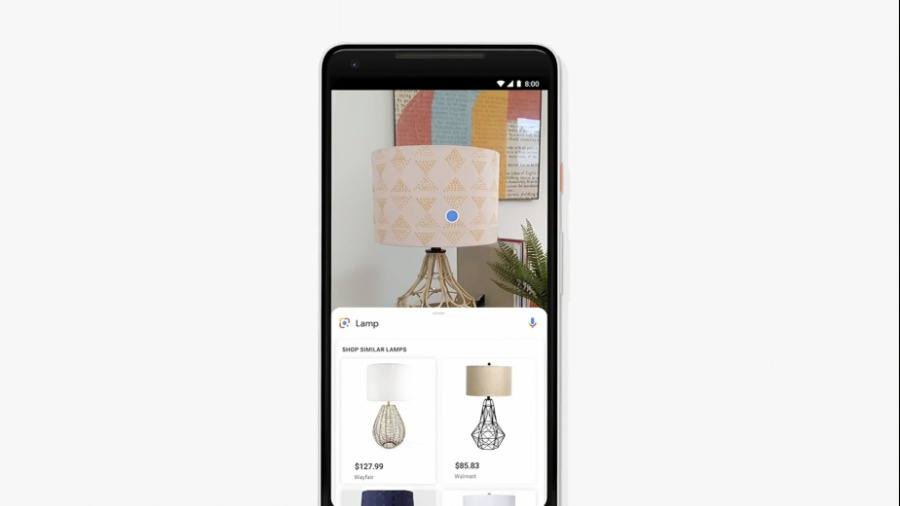

Style Match

When you point the camera at an object, Google Lens can find items in a similar style along with relevant information about them. For instance, if you point the camera at a table, Lens can show similar tables from the web.

Results in Real Time

Google is also working to let Google Lens surface suggestions as soon as the user points the camera at objects, without having to tap on anything. To make this possible, Google combines on-device machine learning with the processing power of its Cloud TPUs.

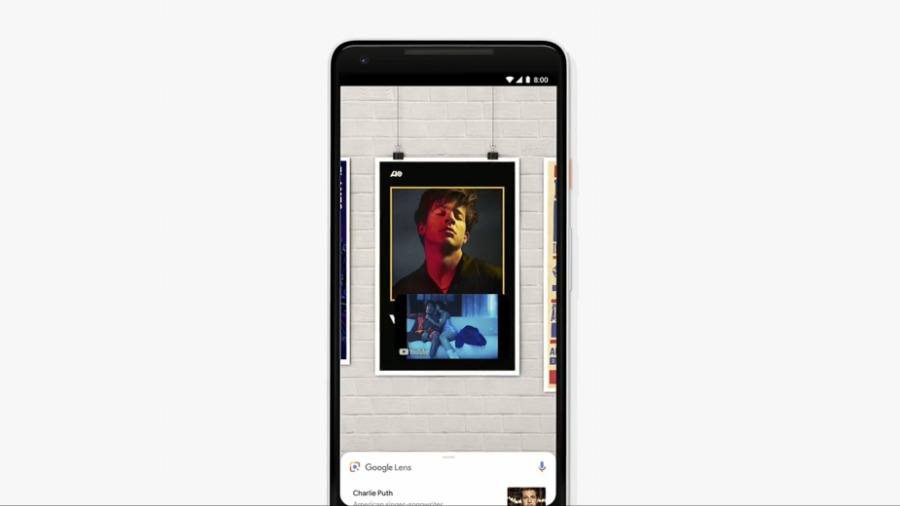

Looking further ahead, Google plans to place virtual overlays on objects so that predefined actions are triggered when a user views them through Google Lens. For instance, pointing the camera at a music poster could start playing a video.

All of these Google Lens features will roll out to users over the next few weeks.

Don’t forget to check out the other exciting announcements from Google I/O.
